Where are the humans in AI? was exhibited at the Carnegie Mellon School of Design’s Design Center, Pittsburgh, on May 8–10, 2018. Some of the projects will also be exhibited as part of Data & Society’s Future Perfect event in New York, June 7–8, 2018.

Download our exhibition catalog

As artificial intelligence pervades our everyday lives, the services we use, and decisions made without our knowledge, are starting to incorporate machine learning and data-driven algorithmic processes. We’re starting to deal with multiple ‘intelligences’, and the boundaries between them may not be clear. But much AI has a human component: it is trained on us, or involves significant human labor behind the scenes, often hidden or marginalized. Our futures potentially involve not just ‘encountering’ AI, but ourselves becoming part of a system of intelligences, an environment of metacognition, with biases and hidden power structures, some familiar, some new.

We are going to have to think about things that think about how we think. Designers have the ability to provoke discussion, but also a responsibility to approach these areas with a critically informed stance. The projects we present here explore how interactions between human and nonhuman intelligences could reshape aspects of our future everyday environments, and through a process of speculative design, construct alternatives which aim to provoke reflection and offer possibilities.

Over the semester we were lucky enough to be joined for talks and crits by Madeleine Elish (Data & Society), Simone Rebaudengo (Automato.farm), Deepa Butoliya (Carnegie Mellon, soon to be University of Michigan), Emily LaRosa (Carnegie Mellon), Professor David Danks (Carnegie Mellon), and Bruce Sterling and Jasmina Tešanović (Casa Jasmina).

EmotoAI: An AI Sidekick

Gautam Bose, Marisa Lu, Lucas Ochoa

Meet Emoto—a robotic system that gives a body to the AI on your phone. Nonverbal communication and cues are a rich and expressive medium that hasn’t yet been really explored by mainstream home assistants. Our project explores expressive motion, and a more intentional placemaking interaction system for the phone, as a contribution to the larger conversation regarding human-to-AI etiquette, and relationships to mobile devices.

More info: marisa.lu/emoto

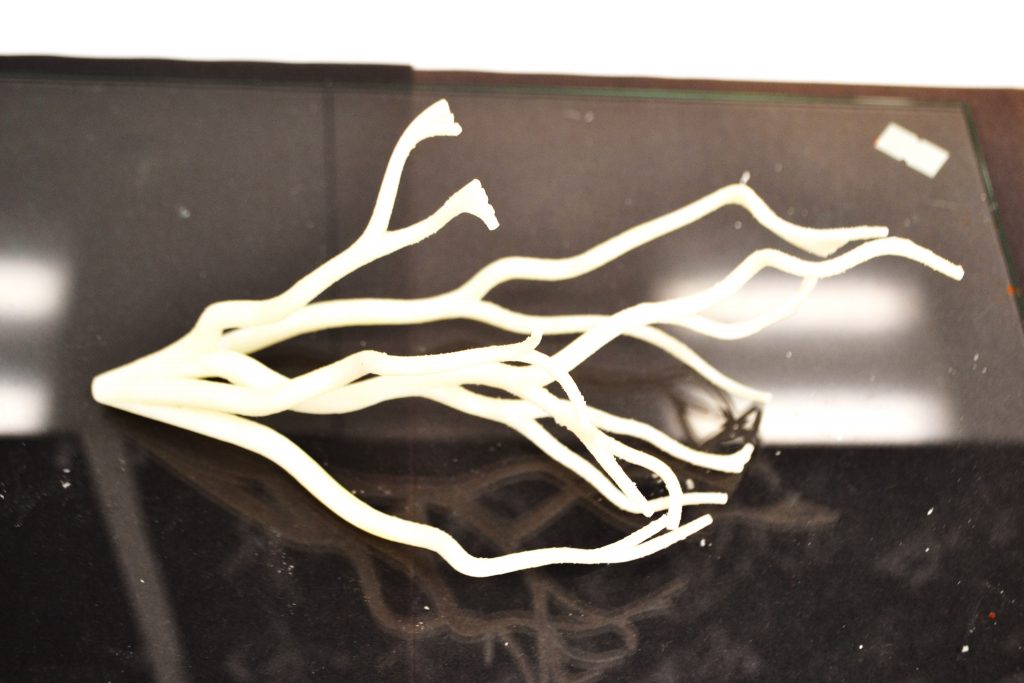

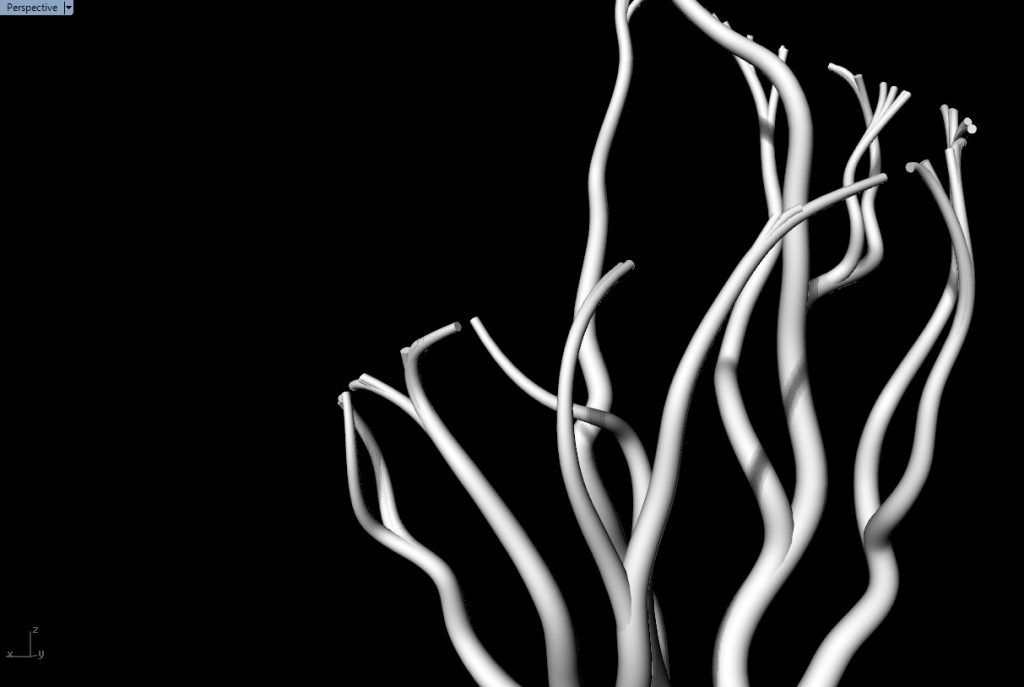

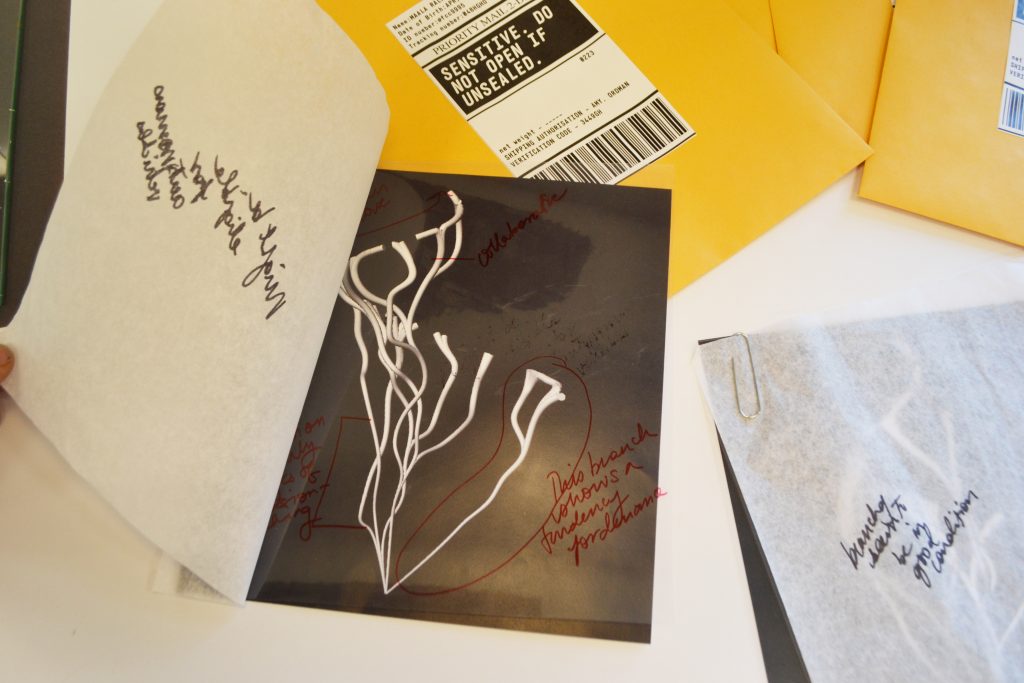

The Moral Code: Understanding Artificial Ethics and the Human Black Box

Aisha Dev

The Moral Code is a speculative data sculpture that grows based on the decisions you make through your lifetime and literally attempts to represent ‘where you’re coming from.’ It acts both as a meditative sculpture and as an ethical footprint that could be used to connect you to your environment via AI systems and products. By plugging in your Moral Code you could embed your own ethical code into the technologies you use, increasing your agency over these systems. This sculpture attempts to democratise the ethics of future AI technologies and address the ‘moral crumple zone’ in unmanned systems.

More info: medium.com/@aishagdev

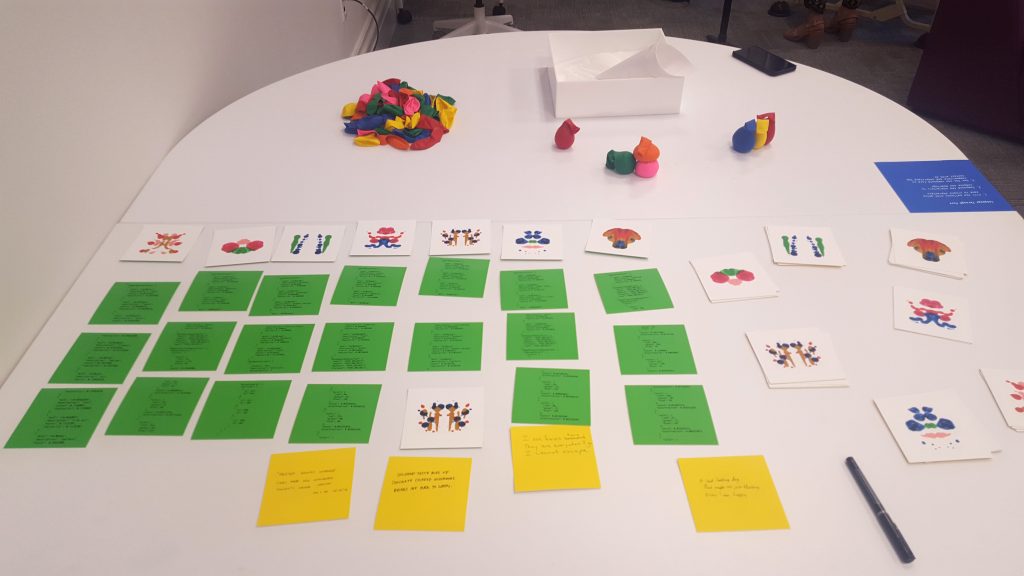

Poetic Language between Humans and Artificial Intelligence

Monica Huang

Communicating intangible emotions is challenging between people—even more so with AI.

What if humans could communicate with AI on humans’ terms, using metaphors, poems and phrases to contextually understand one another?

More info: monica-huang.com/#/where-are-the-humans-in-ai-1

Utterance Artifacts: Interpretation and Translation in the Age of AI

Anna Gusman

Our voice is one of our first creative and collaborative tools. By posing this innate device as a touchpoint for human-computer interaction, people of many ages and levels of bodily agency are able to access complex information systems and technologies. In these contexts, artificial intelligence plays a critical role in the way meaning is derived from verbal expression. This installation poses an AI system as a tool that uses acoustic, emotional and cultural properties of natural speech as a generative framework for interpretation and translation.

More info: www.anna-gusman.com and medium.com/@agusman

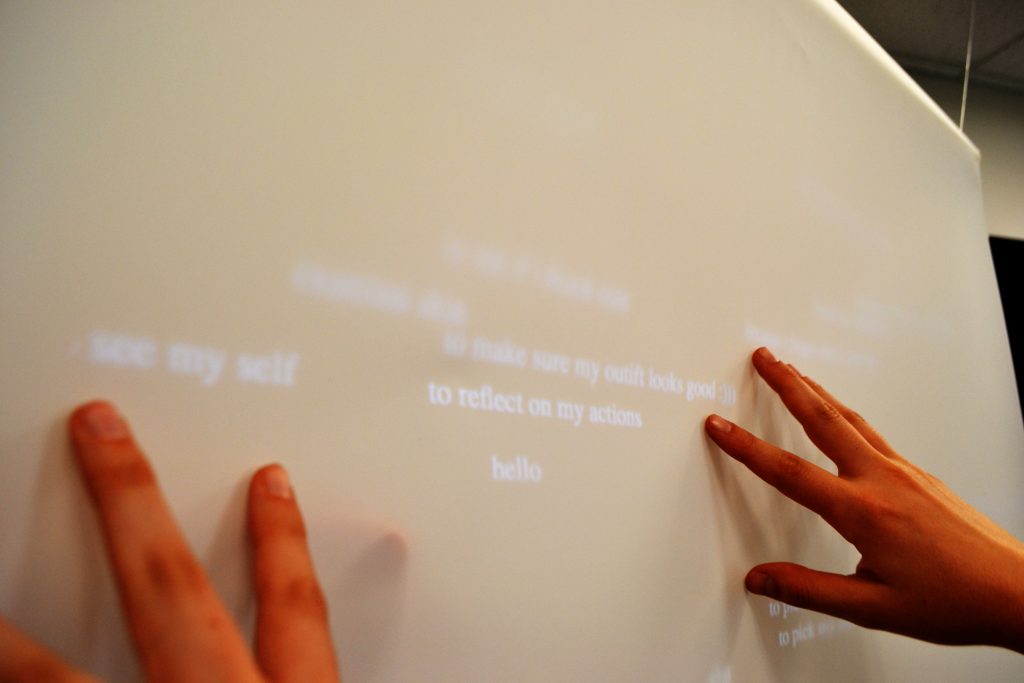

AI Dialogues and the Gaps in Identity

Emma Brennan

The curated algorithms that govern what we see online constantly adapt to our decisions. However, we can fail to realize that this dynamic influences our self-perception and actions; the framing of content can limit how we perceive the bigger picture. What are the gaps in an algorithm’s construction of your identity, and how would it create a more holistic picture of you? What are the collective identities of a community that an algorithm uses to fill in the gaps?

More info: medium.com/@etbrenna

Google Home Mini – 2.0

Maayan Albert

Meet the next iteration of the Google Home Mini. An assistant that not only talks to you, but shows you what it’s thinking.

Prompt it with ‘Ok Google’ and ask it one of a set of questions. If you’re not satisfied with its answer, let it know by saying ‘try again’ or ‘next answer.’

More info: maayanalbert.com

Health AI™

Soonho Kwon & Jessica Nip

Health AI™ is a day-in-the-life of the health- and data-centric future of 2050. It focuses on the user interactions involved in using Cook AI, while providing context for how data is collected and used across the government-regulated AI systems. This installation takes you through the bathroom, kitchen and dining spaces of 2050, where you experience a glimpse of how health data can affect behavior through augmented interactions.

More info: www.soonhokwon.com and www.jessica-nip.com

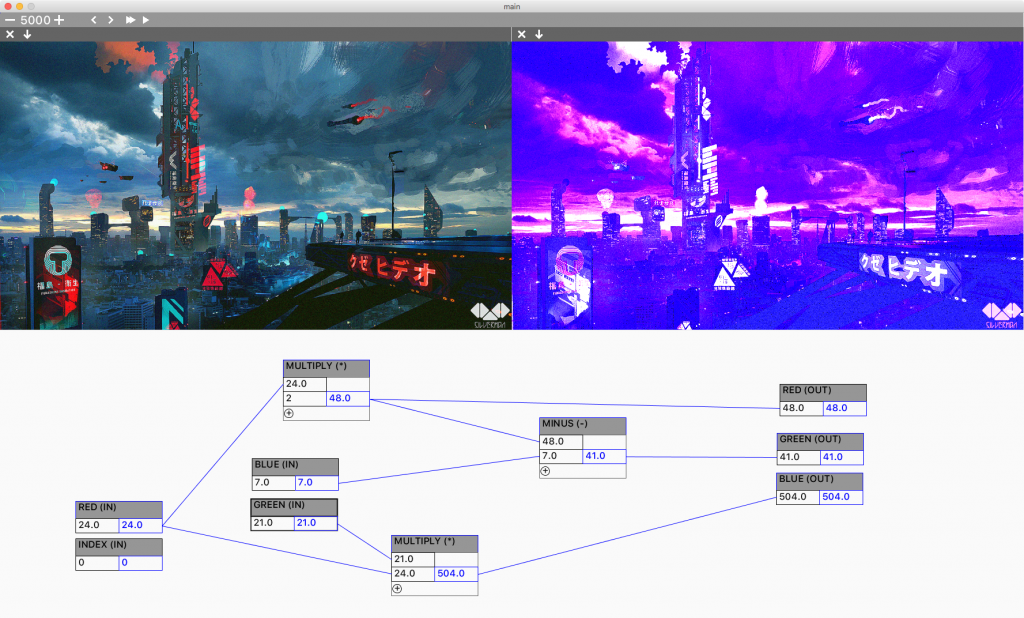

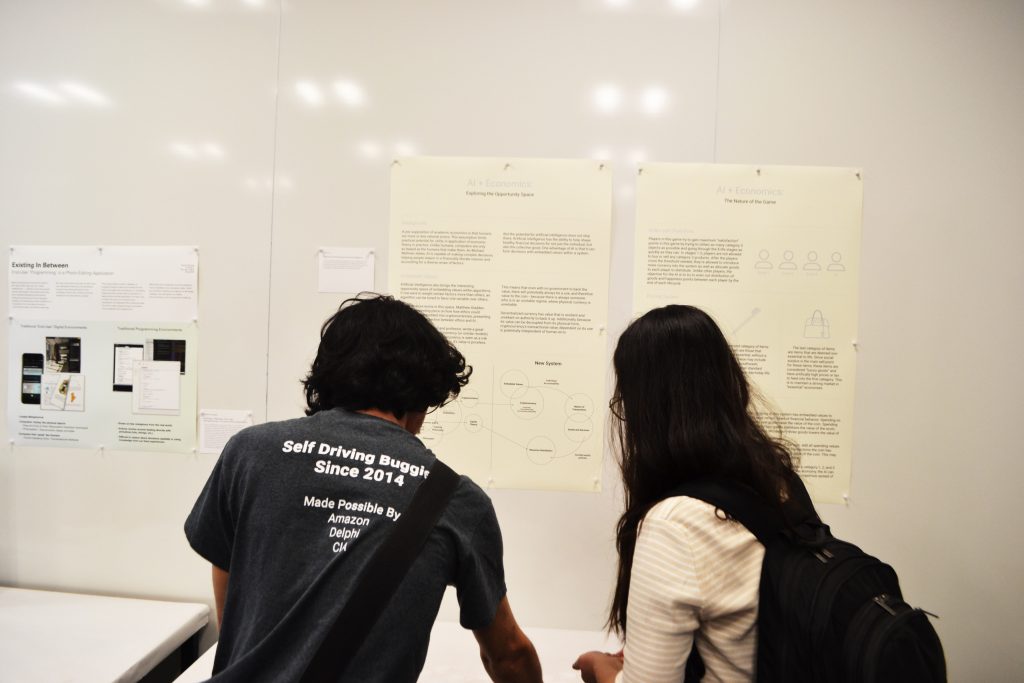

Existing In Between: End-User ‘Programming’ in a Photo-Editing Application

Cameron Burgess

Most computational environments (GUIs, CUIs, etc.) are ‘totally metaphorical’: the abstractions make many tasks easy, but rarely allow end-users to define their own task-spaces. Moreover, developers must contort their minds to build metaphors end-users take for granted. This project seeks to exist in between: a programming/pure-data environment for end-users to struggle with something (e.g. increasing brightness) usually taken for granted in a regular application. It’s also a call to make end-user environments more transparent and enable us to rearrange, redesign, and reprogram our digital environments.

More info: cameron-burgess.com/Blog

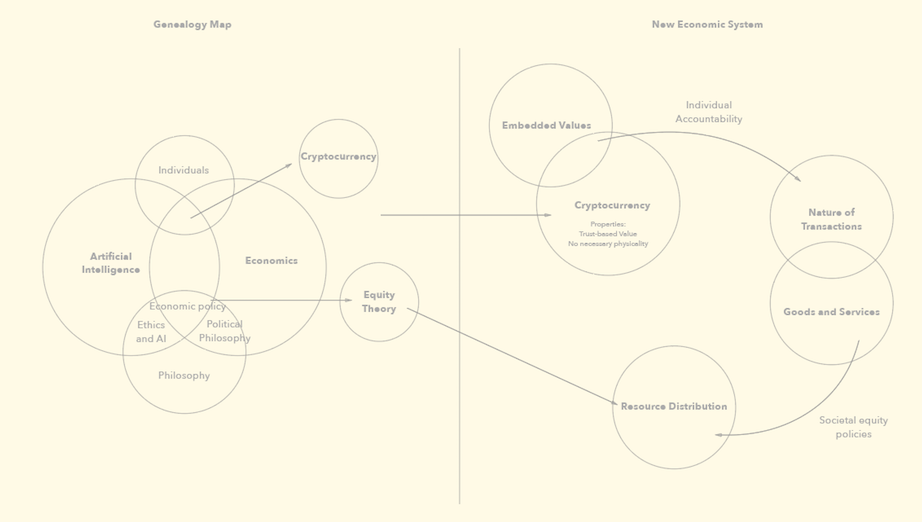

Ethical Economics and Accountable AI

Helen Wu

Artificial intelligence has the potential not just to make healthy financial decisions for the individual, but also to regulate markets on a societal level as a true “rational actor.” Explore the advantages and disadvantages of combining it with spending incentives and a consumerist credit system in this board game.

About the course

Environments Studio IV: Intelligence(s) in Environments, a course for third-year undergraduates taking Carnegie Mellon School of Design’s Environments Track, ran for the second time in Spring 2018. In this studio, we explore design, behavior, and people’s understanding, in physical, digital, and hybrid environments, with a specific focus on intelligence of different kinds, from social interaction to artificial intelligence. The course comprises practical projects and guest talks, focused on investigating, understanding, and materializing intelligence and other invisible and intangible qualitative phenomena and relationships, through new forms of probe, prototype, and speculative design. Instructor: Dan Lockton

Acknowledgements

Exhibition visual identity by Jessica Nip. Door vinyls by Marisa Lu.

Thank you to all our guest speakers (named above), to Jane Ditmore, Darlene Scalese, Tom Hughes, Golan Levin, Deborah Wilt, Ray Schlachter, Joe Lyons, Peter Scupelli, and to Terry Irwin for enabling us to use the Design Center space. And to Bella for visiting when some of us needed it.

