We often hear the phrase ‘intelligent environments’ used to describe spaces in which technology is embedded, in the form of sensors, displays, and computational ability. This might be related to the Internet of Things, conversational interfaces, or emerging forms of artificial intelligence.

But what does ‘intelligence’ mean? There is a long history of attempts to create artificial intelligence — and even to define what it might mean — but the definitions have evolved over the decades in parallel with different models of human intelligence. What was once a goal to produce ‘another human mind’ has perhaps evolved into trying to produce algorithms that claim to ‘know’ enough about how we think to be able to make decisions about us, and our lives. What we have now in ‘intelligent’ or ‘smart’ products and environments is one particular view of intelligence, but there are others, and from a design perspective, designing our interactions with those ‘intelligences’ as they evolve is likely to be a significant part of environments design in the years ahead. Is there an opportunity for designers to explore different kinds of interactions, different theories of mind, or to envisage new forms of intelligence in environments, beyond the dominant current narrative?

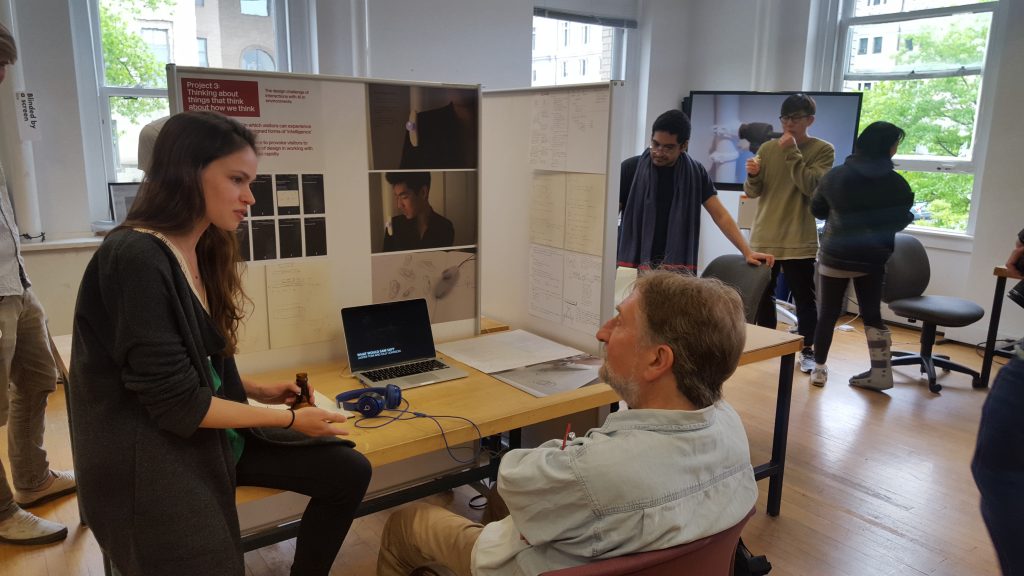

Building on the first two projects’ treatment of how humans use environments, and how invisible phenomena can be materialized, for this project the brief was to create an environment in which visitors can experience different forms of ‘intelligence’, through interacting with them (or otherwise experiencing them). The project was not so much about the technical challenges of creating AI, but about the design challenges of enabling people to interact with these systems in everyday contexts. So, quick prototyping and simulation methods such as bodystorming and Wizard of Oz techniques were entirely appropriate: the aim was to provide visitors to the end-of-semester exhibition (May 4th, 2017) with an experience which would make them think, and provoke them to consider and question the role of design in working with ‘intelligence’.

More details, including background reading, are in the syllabus.

We considered different forms of behaviour, conversation, and ways of thinking that we might consider ‘intelligent’ in everyday life, from being knowledgeable, to being able to learn, to solving problems, to knowing when not to appear knowledgeable, or not to try to solve problems. If one is thinking about how others are thinking, when is the most intelligent thing to do actually to do nothing? Much of what we considered intelligent in others seemed to be something around adaptability to situations, and perhaps even adaptability of one’s theory of mind, rather than behaving in a fixed way. We looked at Howard Gardner’s multiple intelligences, with the idea of interpersonal, or social, intelligence being one which seemed especially interesting from a design and technological point of view. It is more of a challenge to abstract into a set of rules than simply demonstrating knowledge: the feedback necessary for learning may not itself be clear or immediate, and the ability to adjust the model assumed of how other people think is pretty important. How could a user give social feedback to a machine? Should users have to do this at all?

Each of the three resulting projects considers a different aspect of ‘intelligence’ from the perspective of people’s everyday interaction with technologies in the emotionally- and socially-charged context of planning a party or social gathering, and some of the issues that go with it.

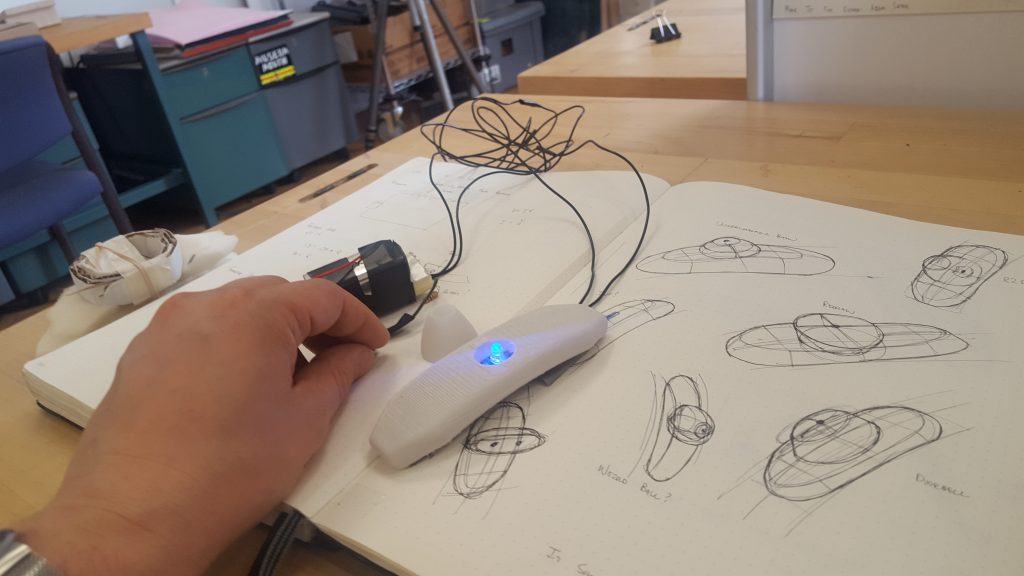

Gilly Johnson and Jasper Tom’s SAM is an “intelligent friend to guide you through social situations”, which plans social gatherings by analysing interaction on social networks, and which also has Amazon Echo-like ordering ability. It’s eager to learn, perhaps too eager.

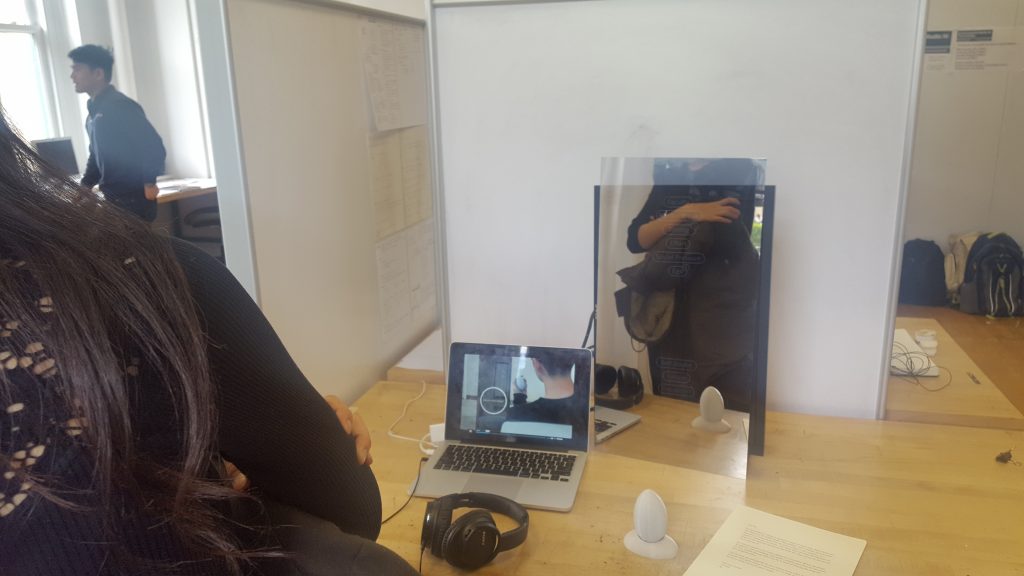

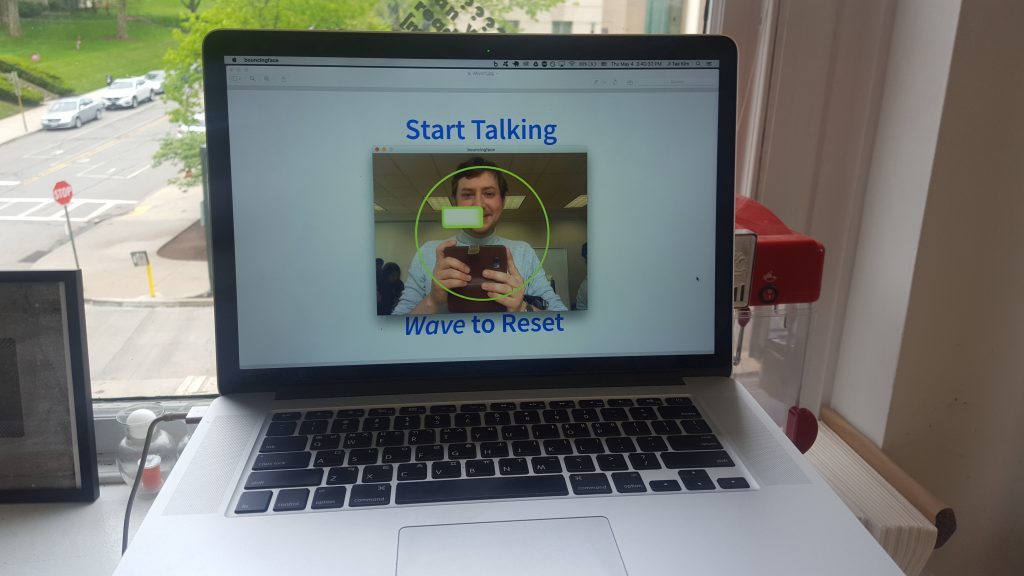

Ji Tae Kim and Ty Van de Zande’s Dear Me, / Miyorr takes the idea that sometimes intelligence can come from not saying anything: from listening, and enabling someone else to speak and articulate their thoughts, decisions, worries, and ideas (there are parallels with the idea of rubber-duck debugging, but also ELIZA). In this case, the system is a kind of magic mirror that listens, extracts key phrases or emphasised or repeated ideas, and (in conjunction with what else it knows about the user) composes a “letter to oneself” which is physically printed and mailed to the user. Ty and Ji Tae also created a proof-of-principle demo of annotated speech-detection that could be used by the mirror.

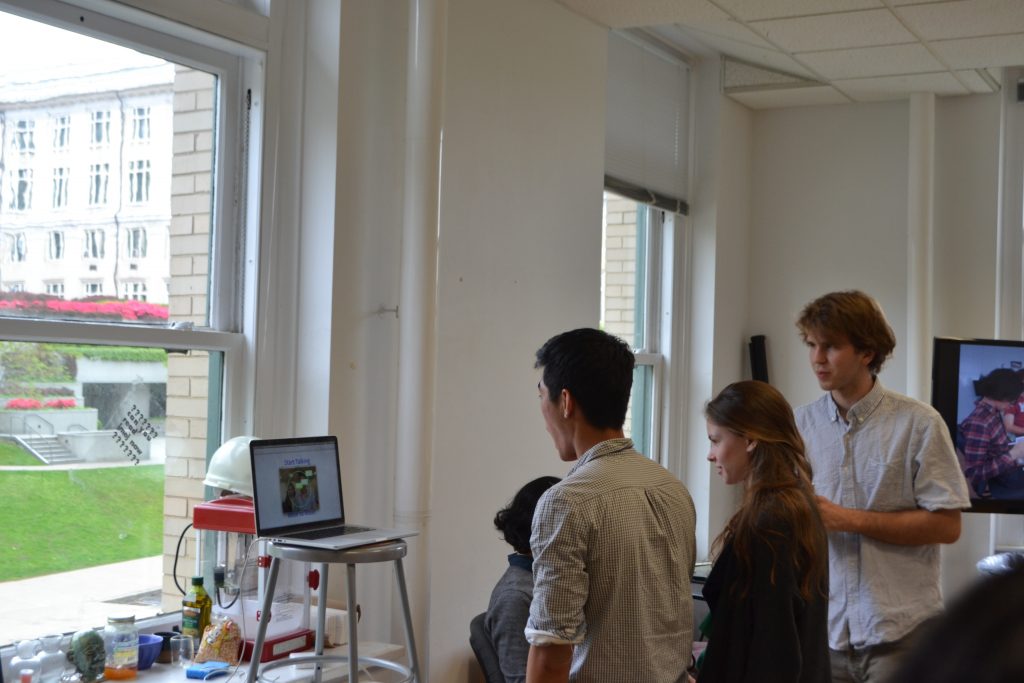

Chris Perry’s Dialectic is an exploration of the potential of discourse as part of decision-making: rather than a single Amazon Echo or Google Home-type device making pronouncements or displaying its ‘intelligence’, what value could come from actual discussion between devices with different perspectives, agendas, or points of view? What happens if the human is in the loop too, providing input and helping direct the conversation? If we were making real-world decisions, we would often seek alternative points of view. Why would we not want that from AI?

Chris’s process, as outlined in the demo, aims partly to mirror the internal dialogue that a person might have. Pre-recorded segments of speech from two devices (portrayed by paper models) are selected ‘backstage’ by Chris, in response to (and in dialogue with) the user’s input. There are parallels with “devices talking to each other” demos, but most of all, the project reminds me of a particular Statler and Waldorf dialogue. In the demo, the devices are perhaps not seeking to “establish the truth through reasoned arguments” but rather to help someone order pizza for a party.
